Ethics in AI

What are Ethics?

Ethics can be understood as the “rules” or “decision paths” that help determine what is good or right. Given this, one may say that the ethics of tech is simply the set of “rules” or “decision paths” used to determine its “behavior”. After all, isn’t technology always intended for good or right outcomes?

Software products, when designed and tested well, do arrive at predictable outputs for predictable inputs via such a set of rules or decision paths.

But how does the team determine what is a good or right outcome, and for whom? Is it universally good or only for some? Is it good in certain contexts and not in others? Is it good by some yardsticks but not by others? These discussions, the questions asked, and the answers the team chooses are critical. Therein lies ethics.

Why Ethics?

Imagine a machine learning model is trained to predict an image. It’s huge, complex, and takes months of training over tons of data on expensive computers; but once that’s done it’s easy to use.

If AI can generate human-like output, can it also make human-like decisions? Spoiler alert: yes, it can, and it already does. But is human-like good enough? What happens to trust in a world where machines generate human-like output and make human-like decisions? Can we trust an autonomous vehicle to have seen us? Can we trust the algorithm to be fair? Can we trust AI in healthcare enough to let it make life-and-death decisions for us?

As people of technology, we must flip this around and ask: How can we make algorithmic systems trustworthy?

Enter Ethics.

Ethics in AI

So far, we understand ethics, why technology is not neutral, and what implications this has. But how do we apply this to AI, wherein the path from inputs to outputs is neither visible nor obvious; where the output is not a certainty but merely a prediction?

In other words, the ethics of AI lies in the ethical quality of its prediction, the end outcomes drawn out of that, and the impact it has on humans.

Issues that point to either the ethical quality of the predictions, end outcomes, or their direct and indirect impact on humans can be summarized in categories.

Importance of Training Data

Let’s take an example. In your Rock Paper Scissors model, try showing the paper pose in two ways: once with the fingers apart and once with the fingers closed.

If the model recognizes both poses as paper, then you have trained it correctly. If it labels the closed-finger pose as rock, your training data missed this case!

According to the model, a paper sign is an object with five separated fingers, and a rock is a single solid shape. That’s why, when all the fingers are closed, the machine identifies the pose as rock.

Other factors can also make the model inaccurate:

- Hand positions: Different hands and different positions, such as upside down.

- Backgrounds: If the background changes, the model will find it difficult to relate the image to its class.

- The hand is too close or too far: If you make these poses too close to or too far from the camera, errors can also creep in.

The main solution to this problem is to train the model with a greater variety of training data. More varied training data generally means a more accurate model.
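One way to add variety without taking many new photos is to create altered copies of the images you already have. The sketch below is only an illustration and is not part of the Rock Paper Scissors tool used in this course. It assumes tiny grayscale images stored as lists of numbers, where 0 stands for the background and the side lengths are divisible by 4. All function names (`flip_vertical`, `zoom_in`, `augment`, and so on) are hypothetical. Each function imitates one of the problems listed above: an upside-down hand, the other hand, a hand that is too close or too far, and a different background.

```python
from typing import List

Image = List[List[int]]  # grayscale grid; 0 is assumed to be background


def flip_vertical(img: Image) -> Image:
    """Imitate an upside-down hand by reversing the row order."""
    return [row[:] for row in img[::-1]]


def flip_horizontal(img: Image) -> Image:
    """Imitate the other hand by mirroring each row left to right."""
    return [row[::-1] for row in img]


def zoom_in(img: Image) -> Image:
    """Imitate a hand that is too close: crop the center, double each pixel."""
    h, w = len(img), len(img[0])
    crop = [row[w // 4 : w // 4 + w // 2] for row in img[h // 4 : h // 4 + h // 2]]
    out: Image = []
    for row in crop:
        wide = [p for p in row for _ in range(2)]  # repeat each pixel twice
        out.append(wide)
        out.append(wide[:])  # repeat each row twice
    return out


def zoom_out(img: Image) -> Image:
    """Imitate a hand that is too far: keep every second pixel, pad the rest."""
    h, w = len(img), len(img[0])
    small = [row[::2] for row in img[::2]]
    out = [[0] * w for _ in range(h)]  # start from an all-background image
    for r, row in enumerate(small):
        for c, p in enumerate(row):
            out[h // 4 + r][w // 4 + c] = p  # place the small copy in the center
    return out


def change_background(img: Image, value: int) -> Image:
    """Replace every background pixel (0) with a new brightness value."""
    return [[value if p == 0 else p for p in row] for row in img]


def augment(img: Image) -> List[Image]:
    """Return the original image plus five altered copies."""
    return [
        img,
        flip_vertical(img),
        flip_horizontal(img),
        zoom_in(img),
        zoom_out(img),
        change_background(img, 50),
    ]


# A made-up 4 x 4 "hand" image, used only for demonstration.
sample = [
    [0, 0, 0, 0],
    [0, 9, 9, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
]
variants = augment(sample)
print(len(variants), "training images from 1 original")
```

Altered copies like these are a supplement, not a replacement, for real variety: photos of different hands, poses, and rooms still give the model the most useful examples.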

Next Session

Session 8

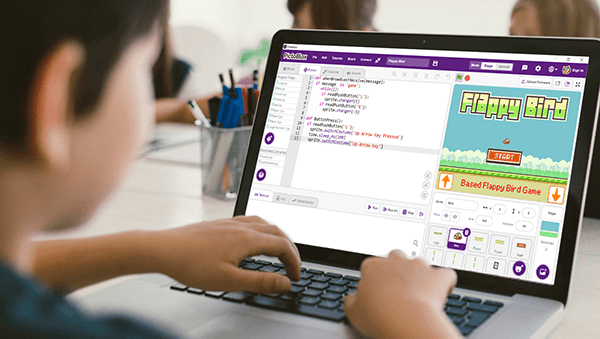

Basics of Python

- Introduction to Python

- Different types of operators

- Input and output functions